Granularity impact on visualization

- Kyle Hailey

- May 22, 2020


The longer the timeline we show in a graph, the more points we have to plot or the coarser the granularity we have to use.

For example, if I show 1 hour of data, I can show 60 points at 1-minute granularity.

If I showed a day at 1-minute granularity, that would be 1440 points.

Say we wanted to limit the graph to fewer than 300 points so our UI stays responsive no matter the time scale.

Then for 24 hours I can show 5-minute granularity, which is 288 points.

If I show 25 hours, that's 300 points at 5-minute granularity, so we are no longer under 300 points.

At this point I'd have to jump to the next level of granularity. In my case the next step up is 1 hour.
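The selection rule described above can be sketched in a few lines of Python. This is only an illustration: the function name `choose_granularity` and the three-step ladder (1 minute, 5 minutes, 1 hour) are assumptions based on the levels mentioned in this post, not the code of any particular tool.

```python
import math

# Assumed ladder of granularities in seconds: 1 minute, 5 minutes, 1 hour.
GRANULARITIES = (60, 300, 3600)


def choose_granularity(span_seconds, max_points=300, levels=GRANULARITIES):
    """Return (step_seconds, point_count) for the finest step that
    keeps the point count strictly under max_points."""
    for step in levels:
        points = math.ceil(span_seconds / step)
        if points < max_points:
            return step, points
    # No level fits the budget: fall back to the coarsest one.
    step = levels[-1]
    return step, math.ceil(span_seconds / step)


one_hour = choose_granularity(1 * 3600)    # 1-minute steps
one_day = choose_granularity(24 * 3600)    # 5-minute steps
day_plus = choose_granularity(25 * 3600)   # jumps to 1-hour steps
```

With these assumed levels, 24 hours lands on 5-minute steps (288 points), while 25 hours would need exactly 300 points at 5 minutes and so moves up to 1-hour steps.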

A graph at 1-hour granularity will look different from one at 5-minute granularity.

The following shows roughly a day of data, first at 5-minute granularity and then at 1-hour granularity.


The most common effect of larger granularity is losing the spikes, i.e., the 5-minute granularity could have highs and lows that get averaged out at larger granularity.
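A small sketch of that averaging effect, using made-up numbers: twenty-four 5-minute samples (two hours) with a single spike, rolled up into 1-hour averages. The helper name `downsample_mean` and the sample values are hypothetical and chosen only for illustration.

```python
def downsample_mean(values, factor):
    """Average consecutive groups of `factor` samples into one value each."""
    result = []
    for i in range(0, len(values), factor):
        bucket = values[i:i + factor]
        result.append(sum(bucket) / len(bucket))
    return result


# Two hours of 5-minute samples: flat at 10, with one spike of 70.
five_minute = [10] * 11 + [70] + [10] * 12

# Twelve 5-minute samples make up one hour.
hourly = downsample_mean(five_minute, 12)

peak_five_minute = max(five_minute)
peak_hourly = max(hourly)
```

Here the 5-minute series peaks at 70, but the hourly average for the same hour is only 15, so the spike is mostly gone from the coarser graph.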

What is noticeable about the 1-hour granularity above is that it is still spiky. The highs and lows are roughly the same. The spikiness comes from the fact that the above graphs are using points. Each point represents the average value over the width of the granularity, but since we are using points, the actual width of the granularity is not visible. It would be more accurate to use a bar for each "point", where the bar is as wide as the granularity.
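One way to think about the bar representation is to turn each averaged point into an interval with a left edge, a right edge, and a height. The sketch below does only that conversion; the function name `points_to_bars` and the sample values are assumptions for illustration.

```python
def points_to_bars(start, step, values):
    """Turn per-bucket averages into (left, right, height) bars,
    where each bar spans exactly one granularity step."""
    bars = []
    for i, value in enumerate(values):
        left = start + i * step
        bars.append((left, left + step, value))
    return bars


# Two hourly averages starting at t=0, with a 3600-second step.
hourly_bars = points_to_bars(0, 3600, [15.0, 10.0])
```

The resulting tuples could then be handed to whatever charting layer is in use. For instance, with matplotlib one could pass the left edges and heights to `Axes.bar` with `width=step` and `align="edge"`, so that each bar covers its full time bucket.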

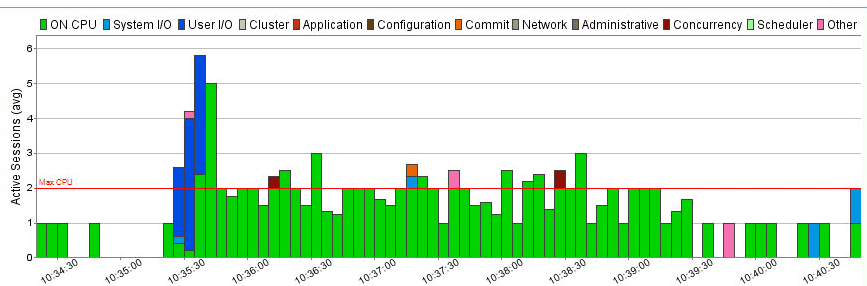

Here are a couple of examples from the tool DB Optimizer, where the width of the bar represents the granularity:
